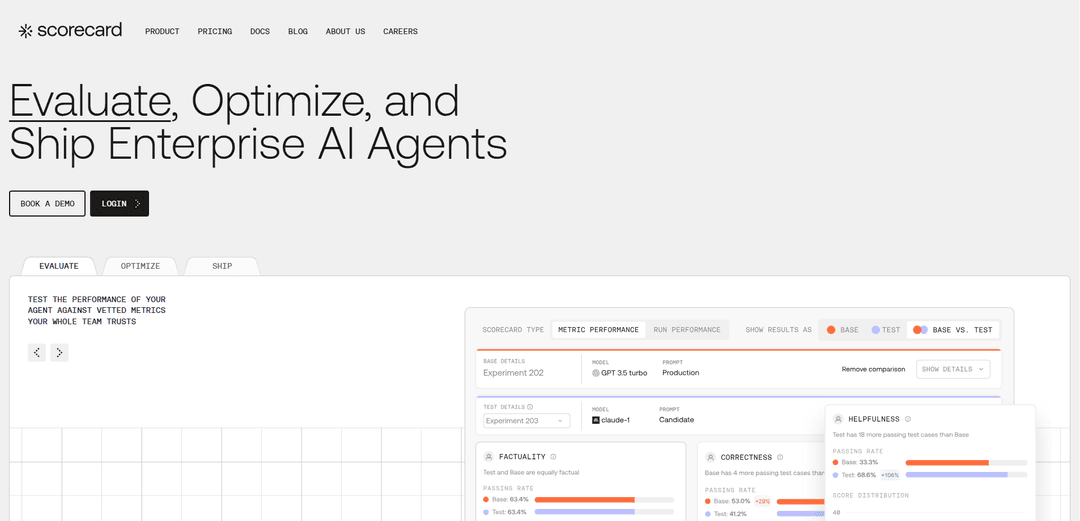

Scorecard

Scorecard is an AI evaluation platform that helps teams build, test, and deploy reliable LLM applications through systematic testing, continuous evaluation, and performance monitoring.

https://scorecard.io/?ref=producthunt

Product Information

Updated: Nov 9, 2025

What is Scorecard

Scorecard is a platform designed to support product teams and engineers in developing and deploying Large Language Model (LLM) applications with confidence. Founded in 2024 and headquartered in San Francisco, the company recently secured $3.75M in seed funding. The platform addresses the challenge of AI unpredictability by providing comprehensive tools for testing, evaluation, and performance monitoring, enabling teams to ship AI products faster and more reliably.

Key Features of Scorecard

Scorecard covers the entire AI development lifecycle for AI agents and LLM applications, with tools for continuous evaluation, prompt management, metric creation, and performance monitoring. It also offers A/B testing, human labeling for ground truth validation, SDK integration, and a playground environment for quick experimentation.

AI Performance Evaluation: Provides continuous monitoring and evaluation of AI agents, with a library of validated metrics and support for creating custom metrics

Prompt Management System: Stores and versions prompts, tracks their performance history, and supports team collaboration

Testing Playground: Offers an interactive environment for quick experimentation and comparison of different AI system versions using real requests

Production Integration: Includes SDK support and tracing capabilities to monitor and debug AI systems in production environments

Use Cases of Scorecard

LLM Application Development: Teams developing language model applications can test, validate, and optimize their models before deployment

Enterprise AI Deployment: Large organizations can ensure quality control and compliance when deploying AI solutions across different departments

RAG System Optimization: Teams can evaluate and improve their Retrieval-Augmented Generation systems with continuous testing and performance monitoring

Chatbot Development: Developers can test and refine chatbot responses, ensuring consistent and accurate interactions with users

Pros

Comprehensive evaluation tools with validated metrics

Easy integration with existing workflows through SDKs

Real-time monitoring and feedback capabilities

Cons

May require maintenance downtime for platform updates

Learning curve for teams new to AI evaluation tools

How to Use Scorecard

Create a Scorecard Account: Sign up for a Scorecard account and obtain your API key. Set the API key as an environment variable for authentication.

Create a Project: Create a new project in Scorecard where your tests and runs will be stored. Make note of the Project ID for later use.

Create a Testset: Create a Testset within your project and add Testcases. A Testset is a collection of test scenarios used to evaluate your LLM system's performance.

Define Metrics: Either select from Scorecard's validated metric library or create custom metrics to evaluate your system. Use the metrics.create() method to define evaluation criteria using prompt templates.

Set Up Your LLM System: Implement your LLM system using dictionaries for inputs and outputs as required by Scorecard's interface.

Run Evaluation: Run your tests, either by clicking the 'Run Scoring' button in the Scorecard UI or through the API, to evaluate your system against the defined metrics.

Monitor Results: Review the evaluation results in the Scorecard UI to understand your system's performance, identify issues, and track improvements.

Continuous Evaluation: Use Scorecard's logging and tracing features to monitor your AI system's performance in real time and identify areas for improvement.

Iterate and Improve: Based on the insights gained, make improvements to your system and repeat the testing process to validate changes.
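
The workflow above can be sketched locally in plain Python to show its shape. This is an illustration only and does not call the Scorecard SDK. The environment variable name `SCORECARD_API_KEY`, the Testcase layout, and the helpers `load_api_key`, `run_system`, `exact_match`, `evaluate`, and `pass_rate` are all hypothetical names chosen for this sketch; the real key name, Testset schema, and metric definitions should be taken from Scorecard's documentation.

```python
import os
from typing import Callable, Mapping, Optional

# Assumption: the variable name is hypothetical; use the one Scorecard documents.
API_KEY_ENV = "SCORECARD_API_KEY"


def load_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Read the API key from the environment and fail early if it is missing."""
    source = os.environ if env is None else env
    key = source.get(API_KEY_ENV, "")
    if not key:
        raise RuntimeError(f"Set {API_KEY_ENV} before running evaluations")
    return key


# A Testset modeled as a list of Testcases (hypothetical layout):
# dictionary inputs plus the expected dictionary output.
TESTSET = [
    {"inputs": {"question": "What is 2 + 2?"}, "expected": {"answer": "4"}},
    {"inputs": {"question": "Capital of France?"}, "expected": {"answer": "Paris"}},
]


def run_system(inputs: dict) -> dict:
    """Stand-in for the LLM system: takes a dictionary, returns a dictionary."""
    canned = {"What is 2 + 2?": "4", "Capital of France?": "Lyon"}
    return {"answer": canned.get(inputs["question"], "")}


def exact_match(outputs: dict, expected: dict) -> bool:
    """A simple local metric: case-insensitive exact match on the answer."""
    return outputs["answer"].strip().lower() == expected["answer"].strip().lower()


def evaluate(
    system: Callable[[dict], dict],
    testset: list,
    metric: Callable[[dict, dict], bool],
) -> list:
    """Run every Testcase through the system and score it with the metric."""
    results = []
    for case in testset:
        outputs = system(case["inputs"])
        results.append(
            {
                "inputs": case["inputs"],
                "outputs": outputs,
                "passed": metric(outputs, case["expected"]),
            }
        )
    return results


def pass_rate(results: list) -> float:
    """Fraction of Testcases that passed; 0.0 for an empty run."""
    return sum(r["passed"] for r in results) / len(results) if results else 0.0
```

Calling `evaluate(run_system, TESTSET, exact_match)` scores both Testcases, and `pass_rate` on the result gives 0.5 because the stand-in system answers the second question incorrectly. Swapping `run_system` for a real LLM call and re-running the same Testset is the iterate-and-improve loop described in the last step.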

Scorecard FAQs

Scorecard is an AI evaluation platform that helps teams test, evaluate, and optimize AI agents. It provides tools for continuous evaluation, prompt management, and performance monitoring of AI models.