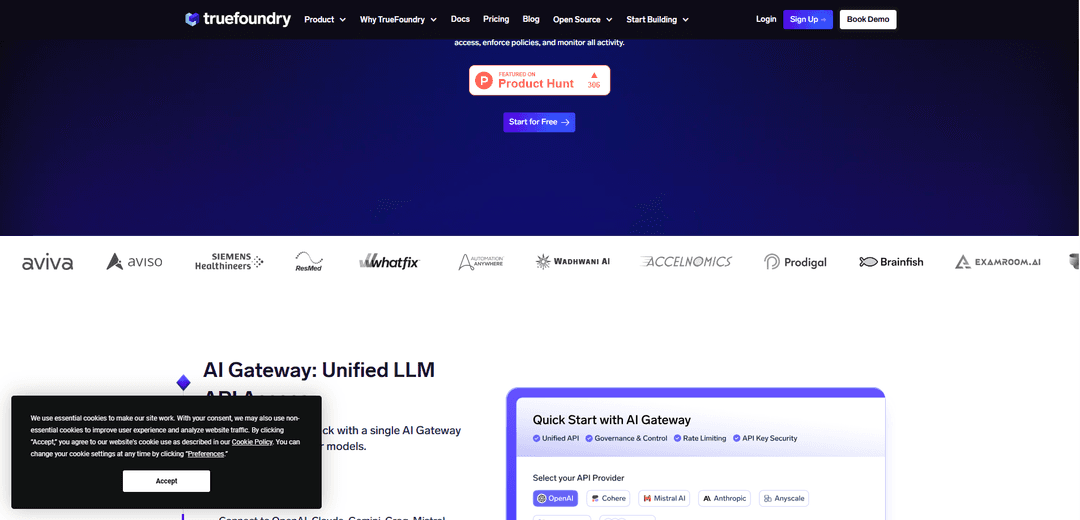

TrueFoundry AI Gateway

TrueFoundry AI Gateway is an enterprise-grade control plane that lets organizations deploy, govern, and monitor LLM and Gen-AI workloads through a unified API with built-in security, observability, and performance optimization.

https://www.truefoundry.com/ai-gateway?ref=producthunt&utm_source=aipure

Product Information

Updated: Dec 9, 2025

What is TrueFoundry AI Gateway

TrueFoundry AI Gateway is a centralized middleware layer that sits between applications and multiple LLM providers, acting as a translator and traffic controller for AI models. It provides a single interface to connect, manage, and monitor LLM providers such as OpenAI, Claude, Gemini, Groq, Mistral, and more than 250 other models. The gateway handles critical infrastructure needs, including authentication, routing, rate limiting, observability, and governance, so organizations can standardize their AI operations while maintaining security and compliance.

Key Features of TrueFoundry AI Gateway

TrueFoundry AI Gateway is an enterprise-grade middleware platform that provides unified access to more than 1000 LLMs with comprehensive security, observability, and governance features. It offers centralized control over API management, model routing, cost tracking, and performance monitoring, and it can be deployed in a VPC, on-premises, or in air-gapped environments. Organizations can use it to implement guardrails, enforce compliance policies, and optimize AI operations through load balancing, failover mechanisms, and detailed analytics.

Unified Model Access and Control: A single API endpoint for accessing more than 1000 LLMs, with centralized key management, rate limiting, and RBAC controls across providers including OpenAI, Claude, Gemini, and custom models

Comprehensive Observability: Real-time monitoring of token usage, latency, costs, and performance metrics, with detailed request-level logging and tracing for debugging and optimization

Advanced Security and Compliance: Built-in guardrails for PII detection, content moderation, and policy enforcement, with support for SOC 2, HIPAA, and GDPR compliance requirements

High-Performance Architecture: Internal latency under 3 ms and capacity for more than 350 RPS on 1 vCPU, with intelligent load balancing and automatic failover
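
To make the automatic failover idea concrete, here is a minimal Python sketch of the general pattern: try a preferred model first and fall back to the next one when a call fails. It is an illustration only, not the gateway's actual implementation. The names `complete_with_failover`, `AllProvidersFailed`, `fake_call`, and the model names are all hypothetical.

```python
from typing import Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised when every candidate model has failed."""


def complete_with_failover(
    prompt: str,
    models: Sequence[str],
    call_model: Callable[[str, str], str],
) -> tuple[str, str]:
    """Try each model in order; return (model_name, reply) from the first success."""
    errors: list[str] = []
    for model in models:
        try:
            return model, call_model(model, prompt)
        except Exception as exc:  # a real client would catch narrower error types
            errors.append(f"{model}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Stand-in for a real provider call (hypothetical): the primary model always times out.
def fake_call(model: str, prompt: str) -> str:
    if model == "primary-model":
        raise TimeoutError("upstream timed out")
    return f"[{model}] echo: {prompt}"


used_model, reply = complete_with_failover(
    "ping", ["primary-model", "backup-model"], fake_call
)
print(used_model, reply)  # backup-model [backup-model] echo: ping
```

A gateway applies this kind of logic centrally, so individual applications do not each need their own retry and fallback code.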

Use Cases of TrueFoundry AI Gateway

Enterprise AI Governance: Large organizations implementing centralized control and monitoring of AI usage across multiple teams and applications, ensuring compliance and cost management

Healthcare AI Applications: Medical institutions deploying AI solutions with HIPAA compliance, PII protection, and strict data governance requirements

Multi-Model Production Systems: Companies running several AI models in production that need unified management, monitoring, and optimization of their AI infrastructure

Secure Agent Development: Organizations building AI agents that require secure tool integration, prompt management, and controlled access to multiple enterprise systems

Pros

High performance with low latency and excellent scalability

Comprehensive security and compliance features

Rich observability and monitoring capabilities

Flexible deployment options (cloud, on-prem, air-gapped)

Cons

May require significant setup and configuration for enterprise deployment

May be complex for smaller organizations with simple AI needs

How to Use TrueFoundry AI Gateway

Create a TrueFoundry account: Sign up for a TrueFoundry account and generate a Personal Access Token (PAT) by following the token generation instructions

Get the gateway configuration details: Obtain the TrueFoundry AI Gateway endpoint URL, base URL, and model names from the unified code snippet in your TrueFoundry playground

Configure the API client: Point the OpenAI client at the TrueFoundry Gateway by setting api_key (your PAT) and base_url (the Gateway URL) in your code

Select a model provider: Choose among the available model providers, such as OpenAI, Anthropic, Gemini, Groq, or Mistral, through the Gateway's unified API

Configure access controls: Set rate limits, budgets, and RBAC policies for teams and users through the Gateway's admin interface

Implement guardrails: Set up input/output safety checks, PII controls, and compliance rules using the Gateway's guardrail configuration

Enable monitoring: Set up observability by configuring metrics, logs, and traces to track latency, token usage, costs, and performance

Test in the Playground: Use the interactive Playground UI to try different models, prompts, and configurations before rolling out to production

Deploy to production: Place the Gateway in your production inference path and route live traffic through it while monitoring performance

Optimize and scale: Use the Gateway's analytics to reduce costs, improve latency, and scale infrastructure based on usage patterns
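
The client configuration step can be sketched as follows. This minimal example uses only the Python standard library to build (without sending) an OpenAI-style chat completion request against the gateway. The base URL, the PAT value, the model name, and the `/chat/completions` path are assumptions for illustration; take the real values from the code snippet in your own playground.

```python
import json
import urllib.request

# Placeholder values (hypothetical): copy the real ones from your playground snippet.
GATEWAY_BASE_URL = "https://gateway.example.com/api/llm"
PAT = "your-personal-access-token"
MODEL_NAME = "your-provider/your-model"


def build_chat_request(
    base_url: str, api_key: str, model: str, user_message: str
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",  # assumed OpenAI-compatible path
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the PAT is sent as a bearer token
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request(GATEWAY_BASE_URL, PAT, MODEL_NAME, "Hello")
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request)
```

With the OpenAI Python SDK, the equivalent is to pass the same two values as `api_key` and `base_url` when constructing the client, as the configuration step describes.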

TrueFoundry AI Gateway FAQs

TrueFoundry AI Gateway is a proxy layer that sits between applications and LLM providers or MCP servers. It provides unified access to more than 250 LLMs (including OpenAI, Claude, Gemini, Groq, and Mistral) through a single API, centralizes API key management, gives visibility into token usage and performance metrics, and enforces governance policies. It supports chat, completion, embedding, and rerank model types while keeping internal latency under 3 ms.
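
As a rough illustration of what token-usage observability means, the sketch below totals token counts per model from OpenAI-style response bodies, which report `prompt_tokens` and `completion_tokens` under a `usage` key. The function name `summarize_usage` and the sample data are hypothetical; the gateway collects this kind of data itself, so this only shows the shape of the aggregation.

```python
from collections import defaultdict


def summarize_usage(responses: list[dict]) -> dict[str, dict[str, int]]:
    """Total request and token counts per model from OpenAI-style response dicts."""
    totals: dict[str, dict[str, int]] = defaultdict(
        lambda: {"requests": 0, "prompt_tokens": 0, "completion_tokens": 0}
    )
    for response in responses:
        usage = response.get("usage", {})
        entry = totals[response["model"]]
        entry["requests"] += 1
        entry["prompt_tokens"] += usage.get("prompt_tokens", 0)
        entry["completion_tokens"] += usage.get("completion_tokens", 0)
    return dict(totals)


# Made-up sample responses, for illustration only.
sample_responses = [
    {"model": "model-a", "usage": {"prompt_tokens": 12, "completion_tokens": 30}},
    {"model": "model-a", "usage": {"prompt_tokens": 8, "completion_tokens": 5}},
    {"model": "model-b", "usage": {"prompt_tokens": 20, "completion_tokens": 40}},
]

summary = summarize_usage(sample_responses)
print(summary["model-a"])
# {'requests': 2, 'prompt_tokens': 20, 'completion_tokens': 35}
```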
