Agenta

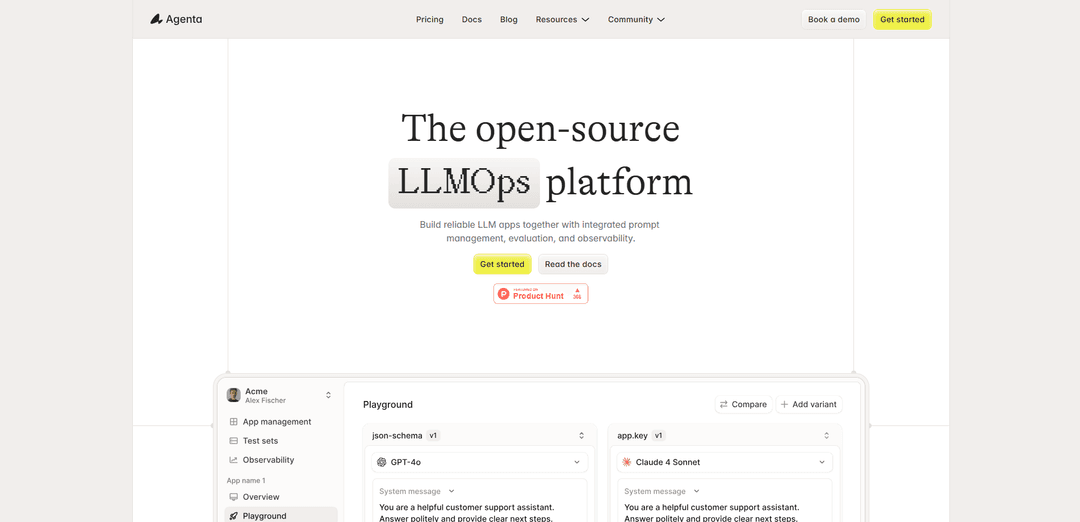

Agenta is an open-source LLMOps platform that provides integrated tools for prompt engineering, evaluation, debugging, and monitoring to help developers and product teams build reliable LLM applications.

https://www.agenta.ai/?ref=producthunt

Product Information

Updated: Dec 9, 2025

What is Agenta

Agenta is an end-to-end platform for developing and deploying Large Language Model (LLM) applications. It serves as a unified workspace where technical and non-technical team members can collaborate on building AI applications. Instead of leaving prompts, evaluations, and monitoring scattered across separate tools, the platform provides infrastructure that follows LLMOps best practices, so teams can manage prompts, run evaluations, and monitor their LLM applications in one place.

Key Features of Agenta

Agenta is an open-source LLMOps platform with tools for building, evaluating, and deploying LLM applications. It combines prompt management, versioning, evaluation, and observability so that teams can streamline their LLM development workflow. The platform supports LLM frameworks such as LangChain and LlamaIndex, works with any model provider, and lets technical and non-technical team members collaborate through a unified interface.

Unified Prompt Management: Centralized platform for storing, versioning, and managing prompts with side-by-side comparison capabilities and complete version history tracking

Systematic Evaluation Framework: Comprehensive evaluation tools including automated testing, LLM-as-a-judge functionality, and human feedback integration for quality assessment

Advanced Observability: Real-time monitoring and tracing of LLM applications with detailed insights into costs, latency, and performance metrics

Collaborative Interface: User-friendly UI that enables both technical and non-technical team members to participate in prompt engineering and evaluation without touching code
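To make the prompt versioning and side-by-side comparison idea more concrete, here is a minimal sketch of an in-memory prompt store with version history and a diff between versions. This is not Agenta's API: `PromptStore`, `commit`, `get`, and `diff` are hypothetical names chosen for illustration, and a real deployment would persist versions rather than hold them in memory.

```python
import difflib
from dataclasses import dataclass, field


@dataclass
class PromptStore:
    """Hypothetical in-memory prompt store (illustration only, not Agenta's API)."""

    history: dict[str, list[str]] = field(default_factory=dict)

    def commit(self, name: str, text: str) -> int:
        """Append a new version of a prompt and return its 1-based version number."""
        versions = self.history.setdefault(name, [])
        versions.append(text)
        return len(versions)

    def get(self, name: str, version: int) -> str:
        """Return the text of a stored version (versions start at 1)."""
        return self.history[name][version - 1]

    def diff(self, name: str, old: int, new: int) -> list[str]:
        """Return a line-level unified diff between two stored versions."""
        return list(
            difflib.unified_diff(
                self.get(name, old).splitlines(),
                self.get(name, new).splitlines(),
                fromfile=f"{name}@v{old}",
                tofile=f"{name}@v{new}",
                lineterm="",
            )
        )


if __name__ == "__main__":
    store = PromptStore()
    store.commit("summarize", "Summarize the text.")
    store.commit("summarize", "Summarize the text in three bullet points.")
    for line in store.diff("summarize", 1, 2):
        print(line)
```

Because every commit is appended rather than overwritten, any earlier version can be retrieved and compared against the current one, which is the property a complete version history depends on.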

Use Cases of Agenta

AI Application Development: Teams building LLM-powered applications can use Agenta to streamline development, testing, and deployment processes

Quality Assurance: Organizations can implement systematic testing and evaluation of AI outputs to ensure consistency and reliability

Cross-functional Collaboration: Product teams and domain experts can work together with developers to optimize AI applications through shared workflows and tools

Pros

Open-source and framework-agnostic

Comprehensive end-to-end LLMOps solution

Strong collaboration features for cross-functional teams

Cons

Limited documentation for advanced features

Requires initial technical setup and integration

How to Use Agenta

Sign up/Access: Use Agenta Cloud (the easiest way to get started) or self-host Agenta and open it locally at http://localhost

Project Setup: Create a new project and integrate Agenta with your existing LLM application codebase (supports LangChain, LlamaIndex, OpenAI, and other frameworks and providers)

Prompt Engineering: Use the unified playground to experiment with and compare different prompts and models side-by-side. Test prompts interactively to refine and optimize outputs

Version Control: Track changes and version your prompts using Agenta's version control system. Keep a complete history of prompt iterations and changes

Create Test Sets: Build test sets from production errors or edge cases. Save problematic outputs to test sets that can be used in the playground for debugging

Set Up Evaluations: Create systematic evaluation processes using built-in evaluators, LLM-as-a-judge, or custom code evaluators to validate changes and track results

Configure Monitoring: Set up tracing and observability to monitor your LLM application in production. Track usage patterns and detect performance issues

Team Collaboration: Invite team members (developers, product managers, domain experts) to collaborate through the UI for experimenting with prompts and running evaluations

Deploy Changes: Deploy approved prompt changes and configurations to production via UI, CLI, or GitHub workflows

Continuous Improvement: Use the feedback loop to continuously improve by turning traces into tests, gathering user feedback, and monitoring live performance
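The test-set and evaluation steps above can be sketched generically as follows. This is a hedged illustration, not Agenta's SDK: `run_evaluation`, `exact_match`, `contains_expected`, and the two `variant_*` functions are hypothetical, and the variants are plain Python stand-ins for real LLM calls so that the example runs without a model provider.

```python
from typing import Callable

Case = dict[str, str]                      # {"input": ..., "expected": ...}
Evaluator = Callable[[str, Case], float]   # returns a score in [0, 1]


def exact_match(output: str, case: Case) -> float:
    """Score 1.0 only if the output equals the expected answer exactly."""
    return 1.0 if output.strip() == case["expected"] else 0.0


def contains_expected(output: str, case: Case) -> float:
    """Custom code evaluator: score 1.0 if the expected answer appears anywhere."""
    return 1.0 if case["expected"].lower() in output.lower() else 0.0


def run_evaluation(
    app: Callable[[str], str],
    test_set: list[Case],
    evaluators: dict[str, Evaluator],
) -> dict[str, float]:
    """Run every test case through the app and return the mean score per evaluator."""
    if not test_set:
        raise ValueError("test_set must not be empty")
    totals = {name: 0.0 for name in evaluators}
    for case in test_set:
        output = app(case["input"])
        for name, evaluate in evaluators.items():
            totals[name] += evaluate(output, case)
    return {name: total / len(test_set) for name, total in totals.items()}


# Stand-ins for two prompt variants; a real setup would call an LLM here.
def variant_a(question: str) -> str:
    return "Paris" if "France" in question else "I don't know"


def variant_b(question: str) -> str:
    answers = {"France": "Paris", "Japan": "Tokyo"}
    for country, capital in answers.items():
        if country in question:
            return f"The capital is {capital}."
    return "I don't know"


if __name__ == "__main__":
    test_set = [
        {"input": "Capital of France?", "expected": "Paris"},
        {"input": "Capital of Japan?", "expected": "Tokyo"},
    ]
    evaluators = {"exact_match": exact_match, "contains": contains_expected}
    for name, app in [("variant_a", variant_a), ("variant_b", variant_b)]:
        print(name, run_evaluation(app, test_set, evaluators))
```

Running the same test set against both variants shows why more than one evaluator is useful: a variant can fail a strict exact-match check while still containing the correct answer, and the choice of evaluator determines which variant looks better.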

Agenta FAQs

Agenta is an open-source LLMOps platform that provides infrastructure for LLM development teams, offering integrated prompt management, evaluation, and observability tools to help teams build reliable LLM applications.