What is Gen-3 Alpha?

Gen-3 Alpha is an AI video generation model developed by Runway, a company that builds AI tools for creative work. Built on Runway's foundational research, it is a significant step forward in generative AI, particularly for high-fidelity content creation. The model lets users generate detailed, highly realistic visuals and videos quickly and with a high degree of control, which makes it a useful tool for artists, filmmakers, and content creators.

A key strength of Gen-3 Alpha is how quickly it lets users explore variations of an idea, scene, or story. Whether that means changing locations, adjusting lighting, or recasting characters, the model shortens the path from concept to execution. It can also generate production-ready assets, from physics-based simulations to detailed renders, with more fidelity and control than earlier generative tools offered.

With Gen-3 Alpha, Runway continues to push the boundaries of what AI can do in the creative industries, offering a powerful toolset that extends human imagination.

Features of Gen-3 Alpha

Gen-3 Alpha is a video generation model that offers significant improvements in fidelity, consistency, and motion over its predecessor, Gen-2. It is designed to power several of Runway's creative tools, including Text to Video, Image to Video, and Text to Image, along with control modes such as Motion Brush and Advanced Camera Controls. Runway describes it as a step toward building General World Models: systems that aim to simulate the world with greater accuracy and detail.

Key Features:

- High-Fidelity Output: Gen-3 Alpha produces high-quality video with improved resolution and detail, so generated videos look more realistic and visually appealing.

- Consistent Motion: The model keeps motion consistent throughout a generated video, which is crucial for smooth, coherent animation and improves the overall quality of the output.

- Advanced Prompt Understanding: Gen-3 Alpha interprets user prompts more accurately, so it responds well to detailed and specific instructions and generates the intended content more reliably.

- Multi-Motion Brush: This feature allows users to apply specific motion and direction to up to five subjects or areas within a scene. It provides fine-grained control over the animation, enabling more creative and dynamic video outputs.

- Camera Control: Users can direct the camera's movement deliberately by selecting its direction and intensity. This adds a cinematic quality to the generated videos, making them more engaging and professional.

- Customization and Fine-Tuning: Runway collaborates with leading entertainment and media organizations to create proprietary fine-tuned versions of Gen-3 Alpha. This customization allows for greater control and consistency in specific styles, characters, and narrative requirements.
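To make the control constraints above concrete, here is a minimal Python sketch that models Multi-Motion Brush regions and a camera move as plain data and checks them before a generation request. Everything in it is hypothetical: the class names, the `validate` function, the direction strings, and the 0.0 to 1.0 intensity range are assumptions for illustration, not Runway's API or settings. The only limit taken from the text is the maximum of five brush regions.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Limit stated in the text: Multi-Motion Brush handles up to five subjects or areas.
MAX_BRUSH_REGIONS = 5


@dataclass
class BrushRegion:
    """Hypothetical: one painted area and the motion direction applied to it."""
    name: str
    direction: str  # illustrative values such as "left" or "up"


@dataclass
class CameraMove:
    """Hypothetical camera control: a direction plus an intensity."""
    direction: str
    intensity: float  # assumed normalized range 0.0 to 1.0 (not Runway's scale)


@dataclass
class ControlSettings:
    """Hypothetical bundle of motion controls for a single generation."""
    regions: List[BrushRegion] = field(default_factory=list)
    camera: Optional[CameraMove] = None


def validate(settings: ControlSettings) -> List[str]:
    """Return human-readable problems; an empty list means the settings pass."""
    problems: List[str] = []
    if len(settings.regions) > MAX_BRUSH_REGIONS:
        problems.append(
            f"Multi-Motion Brush supports at most {MAX_BRUSH_REGIONS} regions, "
            f"got {len(settings.regions)}"
        )
    if settings.camera is not None and not 0.0 <= settings.camera.intensity <= 1.0:
        problems.append(
            f"camera intensity {settings.camera.intensity} is outside the assumed 0.0-1.0 range"
        )
    return problems


if __name__ == "__main__":
    # Six regions and an out-of-range intensity: both checks should report a problem.
    too_many = ControlSettings(
        regions=[BrushRegion(f"area {i}", "left") for i in range(6)],
        camera=CameraMove("pan right", 1.5),
    )
    for problem in validate(too_many):
        print(problem)
```

Returning a list of problems instead of raising on the first one lets an interface report every issue at once.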

How does Gen-3 Alpha work?

Gen-3 Alpha generates high-fidelity, consistent, and dynamic videos from text or image inputs, with significant improvements over its predecessors. Users describe the video they want in a text prompt, specifying elements such as the subject, scene, lighting, and camera movement. Because the model interprets prompts more accurately, its outputs match the description more closely while still leaving room for creative variation.
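A prompt that covers the elements listed above can be assembled programmatically. The sketch below is an illustration under stated assumptions, not Runway's API: `ShotDescription` and `build_prompt` are hypothetical names, and the ordering (camera movement first, then subject and scene, then lighting) is one reasonable convention rather than a required format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ShotDescription:
    """Hypothetical container for the prompt elements named in the text."""
    subject: str
    scene: str
    lighting: Optional[str] = None
    camera: Optional[str] = None


def build_prompt(shot: ShotDescription) -> str:
    """Join the provided elements into one descriptive text prompt.

    The ordering (camera, subject and scene, lighting) is an assumption for
    illustration, not a format required by Runway.
    """
    parts = []
    if shot.camera:
        parts.append(f"{shot.camera}:")
    parts.append(f"{shot.subject} {shot.scene}.")
    if shot.lighting:
        parts.append(f"{shot.lighting}.")
    return " ".join(parts)


if __name__ == "__main__":
    shot = ShotDescription(
        subject="A lone hiker",
        scene="crossing a misty mountain ridge",
        lighting="Soft golden-hour light",
        camera="Slow aerial tracking shot",
    )
    print(build_prompt(shot))
```

Running it prints `Slow aerial tracking shot: A lone hiker crossing a misty mountain ridge. Soft golden-hour light.` Keeping the elements as separate fields makes it easy to vary one of them (for example, only the lighting) while holding the rest of the shot fixed.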

For content creators, filmmakers, and advertisers, Gen-3 Alpha supports rapid ideation: users can visualize video concepts and iterate on them quickly. Its ability to handle complex scene transitions and cinematic styles makes it well suited to visual effects, promotional videos, and even short films. Multi-Motion Brush and Camera Control add fine-grained control over subject motion and camera direction, improving both the creative process and the final output.

Overall, Gen-3 Alpha empowers professionals to push the boundaries of creativity and efficiency in video production.

Benefits of Gen-3 Alpha

Gen-3 Alpha, Runway's latest video generation model, brings several benefits to the creative process. It significantly improves fidelity, consistency, and motion over its predecessors, making it a powerful tool for artists, filmmakers, and content creators.

One standout capability is generating detailed, expressive human characters, which opens up new storytelling possibilities. The model also interprets a wide range of styles and cinematic terminology, so users can describe shots in familiar filmmaking language and still get hyper-realistic results.

Gen-3 Alpha also provides fine-grained temporal control, enabling imaginative transitions and precise key-framing of elements in a scene. This control is crucial for seamless, dynamic video content.

On Unlimited plans, Explore Mode allows unlimited use of Gen-3 Alpha, which makes it well suited to iterative exploration and experimentation. Whether you are crafting a narrative, designing visual effects, or trying new creative directions, Gen-3 Alpha offers the tools and flexibility to bring your vision to life.

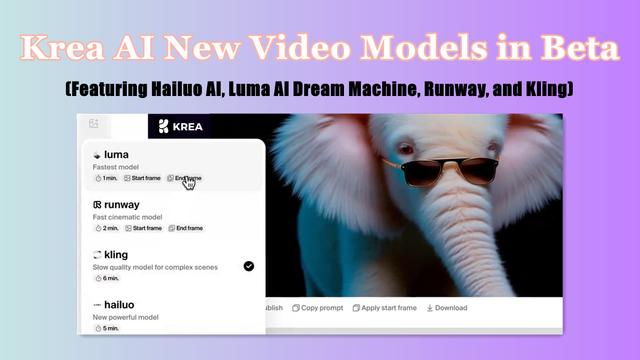

Alternatives to Gen-3 Alpha

While Runway Gen-3 Alpha is a powerful generative AI tool, several alternatives offer related capabilities. Here are three notable options:

- Sora
  - Overview: Developed by OpenAI, Sora is a text-to-video model that combines diffusion techniques with a transformer architecture to create high-quality, dynamic videos.
  - Key Features: Sora can generate videos up to a minute long with intricate detail and shows a strong grasp of lighting, physics, and camera work. OpenAI also applies safety measures intended to prevent misuse.
  - Use Cases: Detailed, dynamic visual stories, longer clips, and complex scene generation.

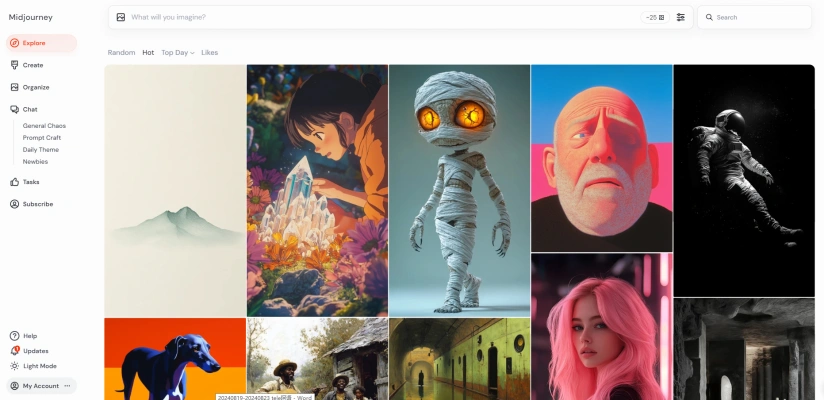

- Midjourney
  - Overview: Midjourney is a generative AI tool focused primarily on creating high-quality images from text prompts. It is generally understood to use diffusion models, which refine an image out of random noise over many iterations.
  - Key Features: Midjourney is known for sharp, detailed visuals, supports a wide variety of styles, and handles complex visual requirements.
  - Use Cases: Artists and content creators exploring diverse concepts and styles, particularly in image generation.

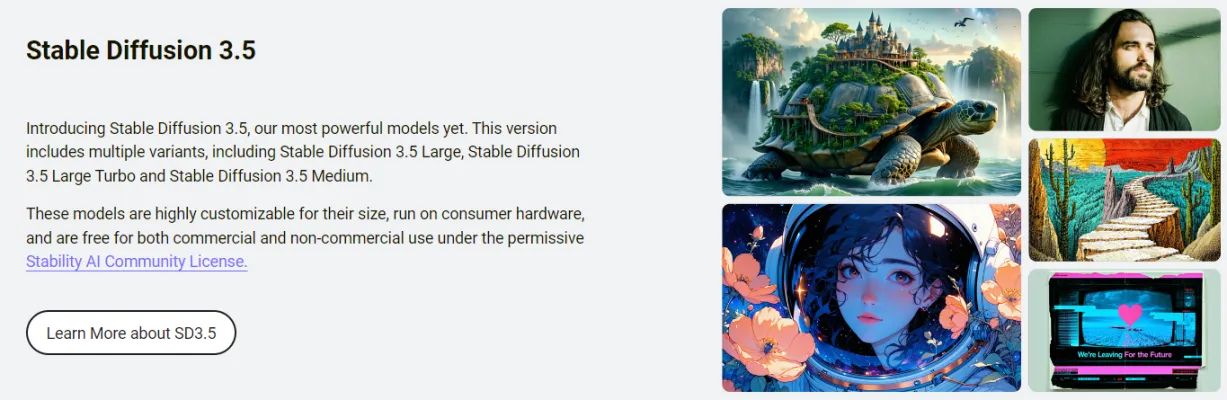

- Stable Diffusion
  - Overview: Stable Diffusion is an open-weights text-to-image model from Stability AI that has been extended to video through Stable Video Diffusion. It uses latent diffusion to produce realistic visuals.
  - Key Features: The video variant is designed to keep elements temporally consistent across frames, and because the weights are openly available, users get fine-grained control over generation, including fine-tuning.
  - Use Cases: Detailed, realistic video content for filmmaking, gaming, and advertising.

Each of these alternatives has its own strengths, making them valuable tools for generative visual content creation.

In conclusion, Gen-3 Alpha is a significant advance in AI-powered video generation. Its high-fidelity output, consistent motion, and advanced control features make it a standout tool for creative professionals. Alternatives such as Sora, Midjourney, and Stable Diffusion are compelling options, but Gen-3 Alpha's combination of features and ease of use makes it a leading choice in the field. As AI continues to evolve, tools like Gen-3 Alpha are likely to reshape creative expression and efficiency in video production.