Meta Llama 3.3 70B

Meta's Llama 3.3 70B is a state-of-the-art language model that delivers performance comparable to the larger Llama 3.1 405B model but at one-fifth the computational cost, making high-quality AI more accessible.

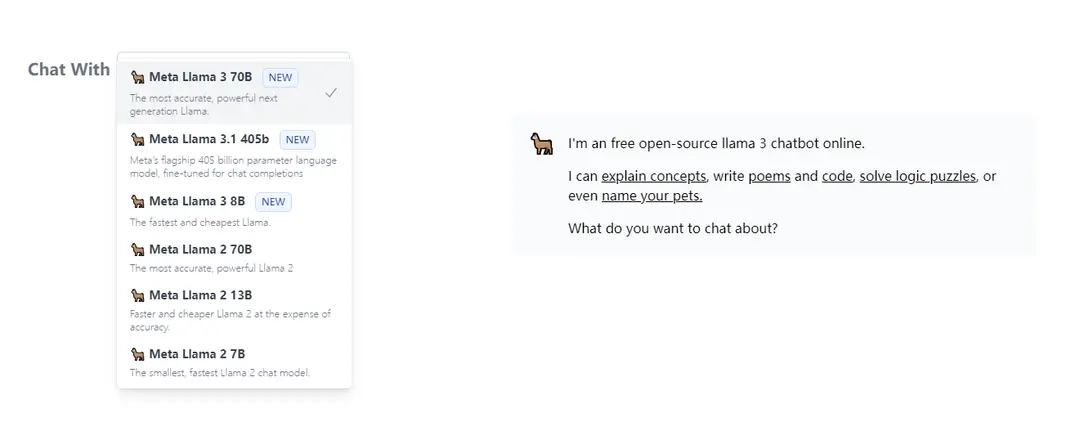

https://llama3.dev/

Product Information

Updated: Jul 16, 2025

What is Meta Llama 3.3 70B

Meta Llama 3.3 70B is an iteration in Meta's Llama 3 family of large language models, released as the company's final model for 2024. Following Llama 3.1 (8B, 70B, 405B) and Llama 3.2 (lightweight text models and multimodal vision variants), this text-only, 70B-parameter model represents a significant advance in efficient model design. It maintains the performance standards of the larger Llama 3.1 405B while dramatically reducing hardware requirements, making it more practical for widespread deployment.

Key Features of Meta Llama 3.3 70B

Meta Llama 3.3 70B delivers performance comparable to the much larger Llama 3.1 405B model with roughly one-sixth of the parameters (70B versus 405B) and a fraction of the computational cost. It relies on advanced post-training techniques and an optimized architecture to achieve strong results across reasoning, math, and general knowledge tasks while remaining efficient and accessible for developers.

Efficient Performance: Achieves performance metrics similar to Llama 3.1 405B while using only 70B parameters, making it significantly more resource-efficient

Advanced Benchmarks: Scores 86.0 on MMLU Chat (0-shot, CoT) and 77.3 on BFCL v2 (0-shot), demonstrating strong capabilities in general knowledge and tool use tasks

Cost-Effective Inference: Offers token generation costs as low as $0.01 per million tokens, making it highly economical for production deployments

Multilingual Support: Officially supports eight languages (English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai) and can be fine-tuned for additional languages, provided such use remains safe and responsible
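To put the quoted per-token price in context, here is a minimal sketch that turns a token volume into an estimated spend. It assumes the $0.01 per million tokens lower bound cited above; real provider pricing varies, and the function name and the example workload are purely illustrative.

```python
# Lower-bound price quoted in this article; actual provider pricing varies.
PRICE_PER_MILLION_TOKENS_USD = 0.01


def estimate_cost_usd(total_tokens: int,
                      price_per_million: float = PRICE_PER_MILLION_TOKENS_USD) -> float:
    """Return the estimated cost in USD for generating `total_tokens` tokens."""
    if total_tokens < 0:
        raise ValueError("total_tokens must be non-negative")
    return total_tokens / 1_000_000 * price_per_million


if __name__ == "__main__":
    # Hypothetical workload: 500 million generated tokens per month.
    monthly_tokens = 500_000_000
    print(f"Estimated monthly cost: ${estimate_cost_usd(monthly_tokens):.2f}")
```

Passing a different `price_per_million` lets you compare providers without changing the function.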

Use Cases of Meta Llama 3.3 70B

Document Processing: Effective for document summarization and analysis across multiple languages, as demonstrated by successful Japanese document processing implementations

AI Application Development: Ideal for developers building text-based applications requiring high-quality language processing without excessive computational resources

Research and Analysis: Suitable for academic and scientific research requiring advanced reasoning and knowledge processing capabilities

Pros

Significantly reduced computational requirements compared to larger models

Comparable performance to much larger models

Cost-effective for production deployment

Cons

Still requires substantial computational resources (though less than 405B model)

Some performance gaps compared to Llama 3.1 405B in specific tasks

How to Use Meta Llama 3.3 70B

Get Access: Fill out the access request form on Hugging Face to gain access to the gated repository for Llama 3.3 70B, then generate a Hugging Face access token with read permission, which is free to create.
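One common way to keep the token out of source code is to store it in an environment variable and read it at startup. The sketch below assumes the variable is named `HF_TOKEN`; the helper name `read_hf_token` is hypothetical and not part of any library.

```python
import os


def read_hf_token(env_var: str = "HF_TOKEN") -> str:
    """Return the access token stored in `env_var`, or raise if it is missing."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your Hugging Face read token"
        )
    return token
```

The returned string can then be passed to `huggingface_hub.login(token=...)` before the model is downloaded.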

Install Dependencies: Install the transformers library and PyTorch. The accelerate package is also needed for the device_map='auto' option used when loading the model.

Load the Model: Import and load the model using the following code:

    import torch
    import transformers

    model_id = 'meta-llama/Llama-3.3-70B-Instruct'

    # Load the weights in bfloat16 and let device_map='auto' place them on the available devices
    pipeline = transformers.pipeline(
        'text-generation',
        model=model_id,
        model_kwargs={'torch_dtype': torch.bfloat16},
        device_map='auto',
    )

Format Input Messages: Structure your input messages as a list of dictionaries with 'role' and 'content' keys. For example:

    messages = [
        {'role': 'system', 'content': 'You are a helpful assistant'},
        {'role': 'user', 'content': 'Your question here'},
    ]
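For multi-turn conversations it can help to assemble the message list with a small helper. The following is a minimal sketch; `build_messages` and `ALLOWED_ROLES` are hypothetical names, and restricting roles to system, user, and assistant is a simplifying assumption rather than a rule of the model.

```python
from typing import Dict, List, Optional

# Roles accepted by this sketch. This set is a simplifying assumption:
# a model's chat template may accept other roles as well.
ALLOWED_ROLES = {"system", "user", "assistant"}


def build_messages(user_prompt: str,
                   system_prompt: Optional[str] = None,
                   history: Optional[List[Dict[str, str]]] = None) -> List[Dict[str, str]]:
    """Assemble a chat list: optional system prompt, prior turns, then the new user turn."""
    messages: List[Dict[str, str]] = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    for turn in history or []:
        # Reject entries that lack the 'role'/'content' keys or use an unknown role.
        if turn.get("role") not in ALLOWED_ROLES or "content" not in turn:
            raise ValueError(f"malformed message: {turn!r}")
        messages.append({"role": turn["role"], "content": turn["content"]})
    messages.append({"role": "user", "content": user_prompt})
    return messages
```

Calling `build_messages('Your question here', system_prompt='You are a helpful assistant')` reproduces the two-entry list shown in this step.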

Generate Output: Generate text by passing messages to the pipeline:

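    outputs = pipeline(messages, max_new_tokens=256)
    # For chat-style input, generated_text holds the whole conversation; the last entry is the model's reply
    print(outputs[0]['generated_text'][-1])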
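When only the model's reply is needed, it can be pulled out of the result structure. This sketch assumes the output shape produced by recent transformers releases for chat-style input, where `generated_text` is a list of message dictionaries ending with the assistant turn. The helper name and the sample data are hypothetical; the sample is hand-written, not real model output.

```python
from typing import Any, Dict, List


def last_assistant_reply(outputs: List[Dict[str, Any]]) -> str:
    """Return the assistant's text from a text-generation pipeline result."""
    conversation = outputs[0]["generated_text"]
    if isinstance(conversation, str):
        # Plain-string prompts yield a plain string instead of a message list.
        return conversation
    reply = conversation[-1]
    if reply.get("role") != "assistant":
        raise ValueError("last message is not from the assistant")
    return reply["content"]


# Hand-written stand-in for a pipeline result (an assumption about its shape).
fake_outputs = [{"generated_text": [
    {"role": "system", "content": "You are a helpful assistant"},
    {"role": "user", "content": "Your question here"},
    {"role": "assistant", "content": "Here is an answer."},
]}]
print(last_assistant_reply(fake_outputs))
```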

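Hardware Requirements: Ensure you have adequate GPU memory. At bfloat16 precision (2 bytes per parameter), the 70B weights alone occupy roughly 140 GB, so the model typically has to be spread across several GPUs or quantized. This is still far less than Llama 3.1 405B requires for similar performance.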
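A rough way to size hardware is to multiply the parameter count by the bytes each parameter occupies. The sketch below covers weights only and ignores activations, the KV cache, and framework overhead, so treat its results as lower bounds. The function name and the dtype table are illustrative assumptions.

```python
# Approximate storage per parameter for common precisions (weights only).
BYTES_PER_PARAM = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str = "bfloat16") -> float:
    """Return the approximate memory, in GB (10^9 bytes), needed to hold the weights."""
    if dtype not in BYTES_PER_PARAM:
        raise ValueError(f"unknown dtype: {dtype}")
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # Compare the 70B and 405B parameter counts at each precision.
    for name, params in [("70B", 70e9), ("405B", 405e9)]:
        for dtype in BYTES_PER_PARAM:
            print(f"{name} @ {dtype}: ~{weight_memory_gb(params, dtype):.0f} GB")
```

Dividing the result by the memory of a single GPU gives a first estimate of how many devices `device_map='auto'` would need to spread the model across.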

Follow Usage Policy: Comply with Meta's Acceptable Use Policy available at https://www.llama.com/llama3_3/use-policy and ensure usage adheres to applicable laws and regulations

Meta Llama 3.3 70B FAQs

Meta Llama 3.3 70B is a pretrained and instruction-tuned generative large language model (LLM) created by Meta AI. It's a multilingual model that can process and generate text.
