OpenAI's o3 model is designed to tackle complex queries efficiently while maintaining high accuracy. Internal safety testing is currently underway, and a public release is expected in early 2025.

Introduction to OpenAI's o3 and o3 Mini models

The introduction of OpenAI's o3 and o3 Mini models marks a major step forward in AI technology. The o3 model is the successor to the previously launched o1 reasoning model and is engineered to handle intricate tasks that require sophisticated reasoning. With these models, OpenAI aims to redefine how AI approaches complex problems in fields like coding, mathematics, and scientific research.

Key Features of OpenAI's o3 and o3 Mini

Advanced Reasoning Capabilities

The o3 model is built on enhanced reasoning abilities, allowing it to process information more thoughtfully than its predecessors. It employs a deliberative approach, working through a problem step by step before producing an answer. Reported benchmark results include:

- 71.7% accuracy on the SWE-bench Verified coding benchmark

- A rating of 2727 on the Codeforces competitive programming platform

- 96.7% accuracy on mathematical reasoning tests like AIME 2024

These scores suggest that the o3 models can match or exceed strong human performance on some coding and mathematics benchmarks, making them robust tools for developers and researchers alike.

Cost Efficiency with OpenAI's o3 Mini

The o3 Mini model offers a more cost-effective alternative without sacrificing performance. It features adaptive reasoning levels that allow users to choose between low, medium, or high effort based on task complexity. This flexibility makes it suitable for various applications, from routine tasks to high-stakes problem-solving scenarios.

- Low-effort mode for speed in simpler tasks

- High-effort mode matching the capabilities of the full o3 model at a lower cost

This adaptability ensures that both the o3 model and o3 Mini cater to diverse user needs while optimizing resource usage.
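As a rough illustration of choosing an effort level per task, here is a minimal Python sketch. It only builds a request payload and sends nothing over the network. The model identifier `o3-mini`, the `reasoning_effort` field name, the 1-10 complexity score, and the thresholds are all assumptions made for this example, not details confirmed by the text above.

```python
from dataclasses import dataclass


@dataclass
class Task:
    prompt: str
    complexity: int  # hypothetical 1-10 score assigned by the caller


def choose_effort(complexity: int) -> str:
    """Map a hypothetical 1-10 complexity score to low/medium/high effort."""
    if not 1 <= complexity <= 10:
        raise ValueError("complexity must be between 1 and 10")
    if complexity <= 3:
        return "low"      # favor speed on simple tasks
    if complexity <= 7:
        return "medium"
    return "high"         # spend more reasoning on hard tasks


def build_request(task: Task, model: str = "o3-mini") -> dict:
    """Build a request payload; the field names are assumed, nothing is sent."""
    return {
        "model": model,
        "reasoning_effort": choose_effort(task.complexity),
        "messages": [{"role": "user", "content": task.prompt}],
    }


if __name__ == "__main__":
    request = build_request(Task("Rename a variable in one file", complexity=2))
    print(request["reasoning_effort"])  # prints "low"
```

Keeping the effort decision in one small function makes the thresholds easy to tune once real cost and latency figures are available.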

Performance Benchmarks: Setting New Standards

Both models have set new benchmarks in AI evaluation:

- The o3 model scored an unprecedented 87.5% on the ARC-AGI benchmark, which tests an AI's ability to reason without relying solely on pre-trained knowledge.

- On the GPQA Diamond science benchmark, o3 achieved 87.7% accuracy, showcasing its ability to tackle PhD-level questions.

These performances highlight the models' capacity to handle complex tasks with exceptional accuracy and efficiency.

Commitment to Safety and Ethical Deployment

OpenAI is committed to ensuring the responsible deployment of its technologies. Both the o3 model and o3 Mini are undergoing rigorous internal safety testing before being made available to the public. This cautious approach reflects OpenAI's dedication to aligning advanced AI systems with human values and societal benefits.

The company has also implemented a “deliberative alignment” strategy aimed at enhancing safety, and it is inviting community feedback during the testing phase. This engagement is crucial for shaping the future of AI deployment as OpenAI continues working towards AGI (Artificial General Intelligence). As competition intensifies in the AI sector, evidenced by recent advancements from other tech giants, OpenAI’s focus on developing reliable and ethical AI tools positions it as a leader in the industry.

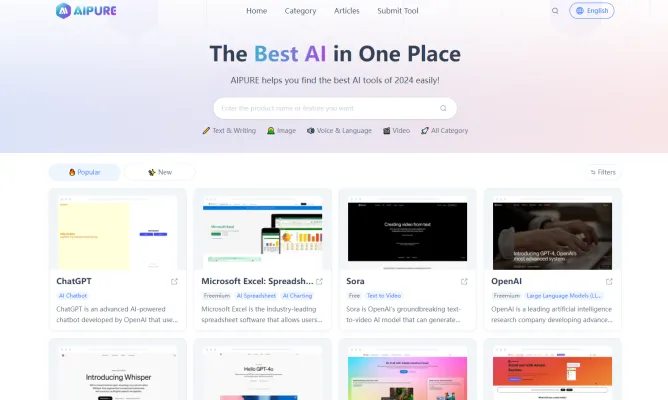

For those eager to explore these groundbreaking developments further or discover additional AI tools, visit AIPURE for more insights into the evolving world of artificial intelligence.