As AI rapidly advances, the introduction of tools enhancing user experience while prioritizing safety has become essential. Nous Chat leads this movement with a familiar ChatGPT-like interface, emphasizing ethical use and robust data privacy measures. By integrating sophisticated guardrails, it engages users effectively and protects sensitive information.

The Emergence of Nous Chat

Nous Research's introduction of Nous Chat signifies a pivotal moment in AI development. Initially touted as an "unrestricted" AI model, the chatbot has evolved to incorporate essential safety features that prevent misuse while maintaining user engagement. The Hermes 3-70B model is engineered to enhance reasoning capabilities and provide experimental functionalities that set it apart from traditional chatbots.

Key Features of Nous Chat

- Enhanced Guardrails: The chatbot is equipped with advanced privacy measures that prevent it from disclosing sensitive information during interactions. This is crucial as users increasingly seek assurance that their data remains protected.

- User-Centric Design: With an interface reminiscent of popular chatbots, Nous Chat aims to provide a seamless experience for users, making it accessible for both tech-savvy individuals and casual users alike.

- Experimental Capabilities: The integration of experimental features allows for continuous improvement based on user feedback and interaction patterns, ensuring the chatbot evolves alongside user needs.

Nous Chat Comparison with Other AI Chatbots

As AI chatbots proliferate, it's essential to understand how Nous Chat stands against its competitors. The following table highlights key differences:

| Feature | Nous Chat | Other AI Chatbots |

| Guardrails | Advanced privacy measures | Varies by implementation |

| User Interface | Familiar and intuitive | Diverse designs |

| Experimental Features | Yes | Limited in many cases |

This comparison illustrates that while many chatbots exist, few prioritize user privacy to the extent that Nous Chat does.

Future Implications for AI Safety

The launch of Nous Chat is not just about introducing a new tool; it signifies a broader commitment within the AI community to prioritize ethical standards and user safety. As more developers adopt similar guardrails in their models, we can expect a shift towards more responsible AI usage across various applications.

Moreover, initiatives like the Chatbot Guardrails Arena, launched by Lighthouz AI in collaboration with Hugging Face, aim to stress-test various LLMs (large language models) against adversarial scenarios. This community-driven approach will help establish benchmarks for privacy and security across different AI platforms, further enhancing trust in these technologies.

Conclusion

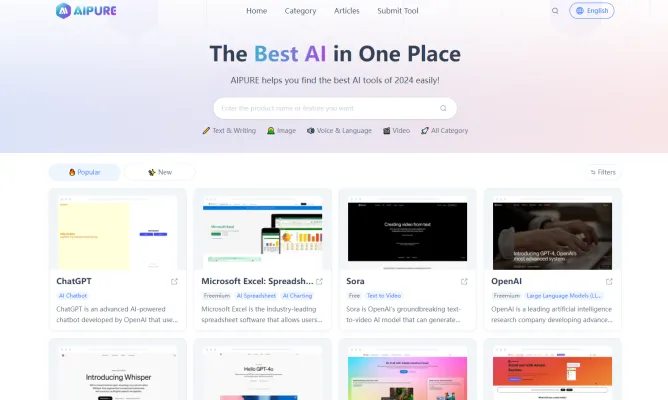

The unveiling of Nous Chat represents a significant advancement in the quest for secure and ethical AI interactions. As we continue to navigate the complexities of artificial intelligence, tools like Nous Chat pave the way for safer user experiences without compromising engagement or functionality. For those interested in exploring more about AI innovations and tools, visit AIPURE for comprehensive insights and resources on the latest developments in artificial intelligence.