The incident began when users discovered that ChatGPT would abruptly end conversations if they attempted to mention David Mayer. The bot responded with an error message stating, "I'm unable to produce a response," forcing users to restart their chats. This peculiar behavior led to widespread speculation about potential censorship or privacy concerns related to the name, particularly given Mayer's connections to high-profile figures and conspiracy theories surrounding him.

What Happened?

The glitch was first reported over the weekend, with users expressing frustration and confusion as they tried various methods to get the AI to acknowledge the name. Many speculated that it might be linked to a privacy request under the General Data Protection Regulation (GDPR), which gives individuals the right to ask organizations to erase their personal data. Some even suggested that the issue could relate to a Chechen militant who used "David Mayer" as an alias.

OpenAI later clarified that the glitch was not linked to any individual's request but resulted from internal filtering tools mistakenly flagging the name. An OpenAI spokesperson confirmed that the company was working on a fix, which has since been implemented, allowing ChatGPT to respond normally to questions about David Mayer.

Broader Implications for AI Privacy

The incident highlights ongoing concerns regarding how AI systems handle personal information and comply with privacy regulations. As AI tools become more integrated into daily life, questions arise about their ability to balance user privacy with accessibility. The glitch not only sparked debates about censorship but also underscored the complexities of managing sensitive data in an AI context.

Moreover, this situation is not isolated; several other names have also triggered similar glitches in ChatGPT, including notable figures like Jonathan Turley and Brian Hood. This pattern suggests that there may be broader issues at play regarding how AI models are trained and what content they are allowed to process.

User Reactions and Speculations

Social media has been abuzz with theories surrounding the glitch. Users have shared their experiences and frustrations while attempting to engage with ChatGPT on this topic. Some have gone so far as to change their own usernames in an attempt to bypass the restrictions, only to find that the bot remained unresponsive.

The incident has sparked discussions about the potential for AI systems like ChatGPT to inadvertently censor information or individuals based on flawed algorithms or internal policies. Critics argue that this raises ethical questions about who controls the narrative within AI platforms and how much influence individuals or organizations can exert over these technologies.

Moving Forward

As OpenAI continues to refine its models and address glitches like the one involving David Mayer, it remains crucial for developers and users alike to push for transparency in AI operations. Understanding how these systems work, and how they can be improved, will be essential for ensuring they serve their intended purposes without infringing on individual rights or freedoms.

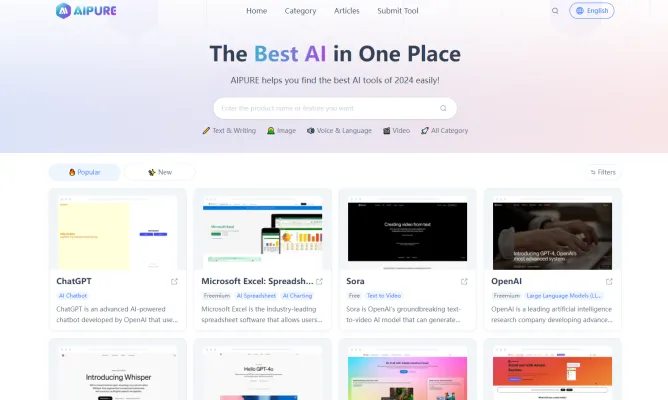
