What is ChatGLM?

ChatGLM is an open-source bilingual language model developed by THUDM for natural language understanding and generation in both Chinese and English. The original release, ChatGLM-6B, has 6.2 billion parameters and is built on the General Language Model (GLM) framework. With INT4 quantization it needs only about 6GB of GPU memory, so it can be deployed on consumer-grade graphics cards.
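
A quick back-of-the-envelope calculation shows why INT4 quantization brings the model within reach of a 6GB card. The sketch below assumes only the 6.2 billion parameter count and the standard storage size of each precision (2 bytes for FP16, 1 byte for INT8, half a byte for INT4). The function name `weight_memory_gb` is illustrative, and the estimate covers weights only; real memory use is higher because activations and runtime overhead are not counted.

```python
# Rough estimate of the memory needed just to hold model weights at
# different numeric precisions. Activations, the KV cache and framework
# overhead are NOT included, so real usage is higher than these numbers.

BYTES_PER_PARAM = {
    "FP16": 2.0,  # 16-bit floating point
    "INT8": 1.0,  # 8-bit integer quantization
    "INT4": 0.5,  # 4-bit integer quantization (two weights per byte)
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the approximate weight footprint in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    params = 6.2e9  # parameter count stated in the article
    for precision in ("FP16", "INT8", "INT4"):
        print(f"{precision}: ~{weight_memory_gb(params, precision):.1f} GB of weights")
```

Running it prints about 12.4 GB for FP16, 6.2 GB for INT8 and 3.1 GB for INT4. The INT4 weights alone fit well inside 6GB, which is consistent with the quoted figure once activations and overhead are added.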

Trained on approximately 1 trillion tokens of Chinese and English text, ChatGLM is optimized for conversation and generates responses that align closely with human preferences. Its training uses techniques such as supervised fine-tuning and reinforcement learning from human feedback (RLHF) to improve its question answering and dialogue.
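
Reinforcement learning from human feedback usually begins by training a reward model on pairs of responses that people have ranked. The sketch below shows the common pairwise (Bradley-Terry) loss for that step. It illustrates the general technique only and is not taken from ChatGLM's training code, which this article does not describe; the function name `pairwise_reward_loss` is illustrative.

```python
import math


def pairwise_reward_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Return -log(sigmoid(reward_chosen - reward_rejected)).

    Small when the reward model scores the human-preferred ("chosen")
    response above the rejected one; large when it ranks them the wrong way.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) equals log(1 + exp(-m)); branch so exp() never overflows.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


if __name__ == "__main__":
    print(pairwise_reward_loss(2.0, 0.5))  # preferred answer ranked higher: low loss
    print(pairwise_reward_loss(0.5, 2.0))  # preferred answer ranked lower: high loss
```

In a typical RLHF pipeline, the trained reward model then scores new responses, and a reinforcement-learning step (commonly PPO) updates the chat model to raise that score.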

ChatGLM suits applications such as customer service chatbots, virtual assistants, and interactive entertainment. Because its weights are openly available, developers and researchers can customize it for their own domains when building intelligent conversational agents. The model is part of a broader effort to make AI technology accessible to businesses and academia alike.

Features of ChatGLM

ChatGLM offers several key features that distinguish it from other language models:

- Bilingual Capability: ChatGLM's proficiency in both Chinese and English makes it exceptionally versatile for global applications. This feature is particularly valuable in multilingual environments and for businesses operating across different language markets.

- Low Resource Requirements: With INT4 quantization, the model runs on consumer-grade hardware with about 6GB of GPU memory. This lets smaller organizations and individual developers use an advanced language model without significant infrastructure investment.

- Human-like Interaction: Fine-tuning with supervised learning and reinforcement learning from human feedback makes ChatGLM's responses read much like human conversation, which improves user engagement across applications.

- Flexible Deployment: The option for local deployment gives users greater control over the model and its applications. This flexibility is crucial for projects requiring data privacy or customized implementations.

- Contextual Understanding: With a context length of 2048 tokens, ChatGLM can follow multi-turn dialogues, provided the conversation fits within that window; longer exchanges have to be truncated or condensed.
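
Because everything the model sees must fit inside the 2048-token window, a chat application typically drops or condenses older turns. Below is a minimal sketch of the simplest policy: keep the most recent turns that fit. The `count_tokens` callback is a stand-in for a real tokenizer, and `reserve_for_reply` (space held back for the model's answer) is an assumed parameter, not a ChatGLM setting.

```python
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (user message, model reply)


def trim_history(
    history: List[Turn],
    query: str,
    count_tokens: Callable[[str], int],
    max_tokens: int = 2048,
    reserve_for_reply: int = 256,
) -> List[Turn]:
    """Drop the oldest turns until history plus the new query fit the budget."""
    budget = max_tokens - reserve_for_reply - count_tokens(query)
    kept: List[Turn] = []
    used = 0
    # Walk backwards so the most recent turns are kept first.
    for user_msg, reply in reversed(history):
        cost = count_tokens(user_msg) + count_tokens(reply)
        if used + cost > budget:
            break  # stop at the first turn that no longer fits
        kept.append((user_msg, reply))
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    # Hypothetical whitespace "tokenizer", for illustration only.
    def naive_count(text: str) -> int:
        return len(text.split())

    history = [("word " * 2000, "ok"), ("hello", "hi there"), ("how are you", "fine")]
    print(trim_history(history, "tell me more", naive_count))
```

Stopping at the first turn that does not fit, rather than skipping it and continuing, keeps the retained history contiguous, so the model never sees a conversation with a gap in the middle.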

How Does ChatGLM Work?

At its core, ChatGLM uses the General Language Model (GLM) architecture with 6.2 billion parameters. GLM is a Transformer pretrained with an autoregressive blank-infilling objective: spans of text are masked out and the model learns to regenerate them, which prepares it for both understanding and generation tasks.
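
The GLM paper describes blank infilling in two parts: the input with each masked span collapsed into a single mask token (Part A), followed by the spans themselves, which the model regenerates token by token (Part B) while seeing all of Part A. The sketch below builds one such training example and its attention mask. It is simplified under stated assumptions: spans are given rather than sampled, they are not shuffled, GLM's 2D positional encoding is omitted, and the strings `[MASK]`, `[START]` and `[END]` are placeholders rather than any real tokenizer's special tokens.

```python
from typing import List, Optional, Tuple

MASK, START, END = "[MASK]", "[START]", "[END]"


def blank_infilling_example(tokens: List[str], spans: List[Tuple[int, int]]):
    """Build one simplified GLM-style example.

    `spans` are sorted, non-overlapping, half-open (start, end) index ranges.
    Returns (inputs, targets, mask); mask[i][j] is True if position i may
    attend to position j.
    """
    # Part A: the corrupted text, each span replaced by a single [MASK].
    part_a: List[str] = []
    cursor = 0
    for start, end in spans:
        part_a.extend(tokens[cursor:start])
        part_a.append(MASK)
        cursor = end
    part_a.extend(tokens[cursor:])

    # Part B: each span is generated left to right. Inputs begin with [START];
    # targets are the same tokens shifted by one and end with [END].
    part_b_inputs: List[str] = []
    part_b_targets: List[str] = []
    for start, end in spans:
        span = tokens[start:end]
        part_b_inputs.extend([START] + span)
        part_b_targets.extend(span + [END])

    inputs = part_a + part_b_inputs
    # Part A is context only, so its positions carry no prediction target.
    targets: List[Optional[str]] = [None] * len(part_a) + part_b_targets

    n_a, n = len(part_a), len(inputs)
    # Part A attends bidirectionally within Part A; Part B attends to all of
    # Part A plus earlier (and current) Part B positions, i.e. causally.
    mask = [[j < n_a if i < n_a else j <= i for j in range(n)] for i in range(n)]
    return inputs, targets, mask


if __name__ == "__main__":
    words = ["x1", "x2", "x3", "x4", "x5", "x6"]
    inputs, targets, _ = blank_infilling_example(words, [(2, 3), (4, 6)])
    print(inputs)
    print(targets)
```

For the six tokens `x1` to `x6` with spans (2, 3) and (4, 6), Part A becomes `x1 x2 [MASK] x4 [MASK]`, Part B's inputs are `[START] x3 [START] x5 x6`, and the targets are `x3 [END] x5 x6 [END]`.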

ChatGLM can hold coherent dialogues across multiple turns of conversation. Earlier turns are passed back to the model along with each new message, so its responses stay consistent with the overall conversation rather than only the latest prompt.
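
A common way for a chat model to stay consistent across turns is to replay earlier exchanges in the prompt for every new message. The sketch below shows that idea. The `[Round n]` / `Q:` / `A:` layout and the function name `build_prompt` are hypothetical, and the template is not claimed to match ChatGLM's actual prompt format.

```python
from typing import List, Tuple


def build_prompt(history: List[Tuple[str, str]], query: str) -> str:
    """Flatten earlier (query, response) turns plus the new query into one prompt.

    The "[Round n]" / "Q:" / "A:" layout is a hypothetical template chosen for
    illustration only.
    """
    parts = []
    for i, (old_query, response) in enumerate(history, start=1):
        parts.append(f"[Round {i}]\nQ: {old_query}\nA: {response}\n")
    # The final round ends with an open "A:" for the model to complete.
    parts.append(f"[Round {len(history) + 1}]\nQ: {query}\nA:")
    return "".join(parts)


if __name__ == "__main__":
    print(build_prompt([("Hi", "Hello!")], "What is GLM?"))
```

After each reply, the application appends `(query, reply)` to `history`, so the next prompt carries the whole conversation, subject to the model's context-length limit.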

Beyond plain text generation, ChatGLM can summarize content, extract information, and, through its integration with CodeGeeX, assist with coding. This range makes it useful for applications from educational tools to software development aids.

Newer versions of ChatGLM can also call external tools autonomously, such as web browsers and Python interpreters. This lets the model act as an AI agent that carries out multi-step tasks instead of answering from its training data alone.
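
Tool use of this kind is usually driven by a loop: at each step the model either requests a tool or returns a final answer, and each tool result is fed back as a new message. The sketch below assumes a hypothetical JSON protocol (`{"tool": ..., "args": ...}` or `{"answer": ...}`) and a toy `add` tool in place of a real browser or Python interpreter; it does not reproduce ChatGLM's actual function-calling format.

```python
import json
from typing import Callable, Dict, List


def run_agent(
    model: Callable[[List[dict]], str],
    tools: Dict[str, Callable[..., str]],
    user_message: str,
    max_steps: int = 5,
) -> str:
    """Minimal tool-calling loop under a hypothetical JSON protocol.

    The model returns either {"tool": name, "args": {...}} to request a tool,
    or {"answer": text} to finish. Each tool result is appended to the
    message list so the model can use it on the next step.
    """
    messages: List[dict] = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):
        reply = json.loads(model(messages))
        if "answer" in reply:
            return reply["answer"]
        name, args = reply["tool"], reply.get("args", {})
        if name in tools:
            result = tools[name](**args)
        else:
            result = f"error: unknown tool {name!r}"
        messages.append({"role": "tool", "name": name, "content": result})
    return "stopped: step limit reached"


if __name__ == "__main__":
    # Scripted stand-in for a real model: request a tool once, then answer.
    def scripted_model(messages: List[dict]) -> str:
        if messages[-1]["role"] == "user":
            return json.dumps({"tool": "add", "args": {"a": 2, "b": 3}})
        return json.dumps({"answer": f"The sum is {messages[-1]['content']}."})

    toy_tools = {"add": lambda a, b: str(a + b)}  # toy tool, not a real interpreter
    print(run_agent(scripted_model, toy_tools, "What is 2 + 3?"))
```

The `max_steps` cap guards against a model that keeps requesting tools without ever answering. A real agent would also sandbox powerful tools such as a Python interpreter rather than run them directly.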

Benefits of ChatGLM

Using ChatGLM offers several benefits:

- Enhanced User Experience: ChatGLM's contextual understanding and ability to engage in multi-turn dialogues lead to more natural and satisfying user interactions. This is particularly beneficial for applications like customer service chatbots and virtual assistants.

- Scalability and Customization: The model can run at different precisions (FP16, INT8, or INT4) to match the available hardware, and it can be fine-tuned for specific industry needs, making it adaptable to projects of various sizes.

- Creative Content Generation: ChatGLM's human-like text generation capabilities make it an excellent tool for creative tasks such as storytelling, content creation, and summarization.

- Multilingual Support: With its bilingual capabilities, ChatGLM can serve a global audience, breaking down language barriers in international business and communication.

- Resource Efficiency: The model's ability to run on consumer-grade hardware makes it a cost-effective solution for organizations of all sizes.

Alternatives to ChatGLM

While ChatGLM offers impressive capabilities, several alternatives in the market provide similar or complementary features:

- Perplexity: An AI-powered search engine and conversational assistant that provides direct answers with source citations [5].

- Google Gemini: A multimodal AI model from Google that integrates with Google products and offers enhanced problem-solving capabilities [3][4].

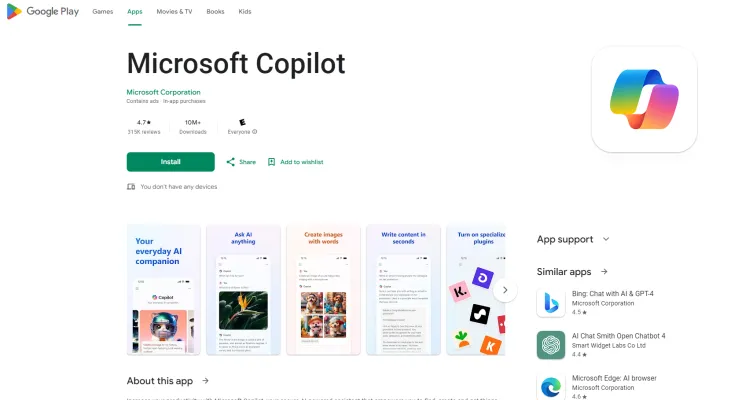

- Microsoft Copilot: An AI-powered assistant that integrates seamlessly with Microsoft 365 applications to boost productivity [3][4].

- DeepSeek LLM: A 67-billion-parameter model designed for complex NLP tasks, trained on 2 trillion tokens of English and Chinese text.

- PanGu-Σ: Huawei's trillion-parameter model for natural language processing and understanding, which uses a sparse architecture based on Random Routed Experts to make training at that scale practical.

Each of these alternatives offers unique strengths, catering to different needs in the AI and natural language processing landscape.

In conclusion, ChatGLM represents a significant advancement in bilingual AI language models. Its combination of powerful features, efficient resource usage, and versatile applications makes it a valuable tool for developers, businesses, and researchers alike. As the field of AI continues to evolve, models like ChatGLM are paving the way for more sophisticated and accessible language processing solutions.